In week 2, we studied convolutional neural networks and computer vision tasks.

Convolution

▶ When a fully connected (FC) layer is used on image data,

- Flattening the image and fully connecting every node : Too many parameters.

- Only a few regions are important in telling what is in the image.

- An FC layer does not recognize an image as the same one after even a slight translation.

- Translation invariance is what we need for computer vision tasks : this is why we augment the data with translated images.

The number of parameters becomes too large, and it is hard to properly extract the spatial structure and local features of 2-D image data.
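To make the parameter argument concrete, here is a minimal sketch that counts weights and biases for one FC layer and one convolutional layer. The image size (224x224x3), the 1000 FC units, and the 64 3x3 filters are assumptions chosen only for illustration, not values from the lecture.

```python
def fc_params(in_features: int, out_features: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return in_features * out_features + out_features


def conv_params(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights plus biases of a 2-D convolution with a square kernel."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels


# Assumed sizes, chosen only for illustration.
height, width, channels = 224, 224, 3

fc = fc_params(height * width * channels, 1000)  # flatten, then 1000 units
conv = conv_params(channels, 64, 3)              # 64 filters of size 3x3

print(fc)    # 150529000
print(conv)  # 1792
```

The convolution count does not depend on the image size at all, because the same small filter is reused at every location.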

▶ Padding and striding are applied during convolution.

- Padding ; Add values (usually 0) to the borders of the image : Solves the problem of filters running out of image : Often used to make the output the same size as the input.

- Striding ; Jump across some locations : Subsamples the image.
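The effect of padding and stride on the output size can be sketched with a naive single-channel convolution on nested lists. This is an illustrative sketch, not an efficient implementation: the function names are hypothetical, the kernel is assumed to be square, and (as in most deep learning libraries) the kernel is not flipped.

```python
def conv_output_size(n: int, kernel: int, padding: int = 0, stride: int = 1) -> int:
    """Output length along one axis: floor((n + 2p - k) / s) + 1."""
    return (n + 2 * padding - kernel) // stride + 1


def conv2d(image, kernel, padding=0, stride=1):
    """Naive single-channel 2-D convolution (no kernel flip) on lists of lists."""
    k = len(kernel)
    h, w = len(image), len(image[0])
    # Zero-pad the borders so the filter does not run out of image.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for i in range(h):
        for j in range(w):
            padded[i + padding][j + padding] = image[i][j]
    out_h = conv_output_size(h, k, padding, stride)
    out_w = conv_output_size(w, k, padding, stride)
    out = []
    for oi in range(out_h):
        row = []
        for oj in range(out_w):
            top, left = oi * stride, oj * stride  # stride = jump between windows
            row.append(sum(
                padded[top + a][left + b] * kernel[a][b]
                for a in range(k) for b in range(k)
            ))
        out.append(row)
    return out


image = [[float(r * 4 + c) for c in range(4)] for r in range(4)]  # 4x4 toy image
box = [[1.0] * 3 for _ in range(3)]                               # 3x3 sum filter

same = conv2d(image, box, padding=1, stride=1)     # 4x4: same size as the input
strided = conv2d(image, box, padding=1, stride=2)  # 2x2: subsampled
```

With a 3x3 filter, padding 1 keeps the 4x4 size, and stride 2 keeps every second window of that result.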

▶ Shape

▶ Matrix

▶ Increasing the receptive field

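- Stacking conv layers increases the size of the receptive field.

- This works better than a single large filter (the same receptive field with fewer parameters and more non-linearities).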

- Dilated filter -
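As a rough sketch of the points above, the receptive field of a stack of conv layers can be computed layer by layer, treating a dilated filter as a filter with effective size `dilation * (kernel - 1) + 1`. The helper name and the layer configurations (three 3x3 layers, 64 channels, dilations 1/2/4) are assumptions for illustration.

```python
def receptive_field(layers):
    """layers: list of (kernel_size, stride, dilation), ordered input to output."""
    rf, jump = 1, 1  # jump = distance in input pixels between adjacent outputs
    for kernel, stride, dilation in layers:
        effective_kernel = dilation * (kernel - 1) + 1
        rf += (effective_kernel - 1) * jump
        jump *= stride
    return rf


stacked = receptive_field([(3, 1, 1)] * 3)                      # three 3x3 layers
single = receptive_field([(7, 1, 1)])                           # one 7x7 layer
dilated = receptive_field([(3, 1, 1), (3, 1, 2), (3, 1, 4)])    # dilations 1, 2, 4

# Weight counts (biases ignored) for an assumed 64 -> 64 channel mapping.
channels = 64
stacked_weights = 3 * (3 * 3 * channels * channels)
single_weights = 7 * 7 * channels * channels

print(stacked, single, dilated)         # 7 7 15
print(stacked_weights, single_weights)  # 110592 200704
```

Three stacked 3x3 layers see the same 7x7 region as one 7x7 filter with roughly half the weights, and dilation widens the region further without adding weights.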

▶ Decreasing the size of tensor

- Pooling : Subsample the image by averaging or taking the max over each region. -> Recently, other methods are used more often.
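A minimal sketch of pooling on a single-channel image, assuming non-overlapping square windows (window size equal to stride). The function name and the toy values are hypothetical.

```python
def pool2d(image, size=2, mode="max"):
    """Non-overlapping pooling over size x size windows of a list-of-lists image."""
    combine = max if mode == "max" else (lambda xs: sum(xs) / len(xs))
    out = []
    for i in range(0, len(image) - size + 1, size):
        row = []
        for j in range(0, len(image[0]) - size + 1, size):
            window = [image[i + a][j + b] for a in range(size) for b in range(size)]
            row.append(combine(window))
        out.append(row)
    return out


image = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

max_pooled = pool2d(image, mode="max")  # [[4, 2], [2, 8]]
avg_pooled = pool2d(image, mode="avg")  # [[2.5, 1.0], [1.25, 6.5]]
```

Either way a 4x4 input becomes 2x2, so the tensor shrinks by a factor of 4 without any learned parameters.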

Convnet architectures

Let's look at the SOTA models that won the ILSVRC (ImageNet Large Scale Visual Recognition Challenge).

The LeNet-like structure is used as a baseline architecture for CV tasks.

▶ AlexNet

▶ VGG

▶ GoogLeNet

- As deep as VGG, but has only 3% of the parameters!

- No fully-connected layers

- Stack of Inception Modules.

Instead of picking one filter size, compute all of them in parallel and concatenate the results.

Follows the hypothesis that cross-channel correlations and spatial correlations are decoupled and can be mapped separately.
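The "compute all of them and concatenate" idea can be sketched by counting output channels and weights of one Inception-style module. The branch widths below are assumptions for illustration (they follow a commonly cited early-module configuration, but treat them as hypothetical), and biases are ignored.

```python
# Each branch is a list of (kernel_size, out_channels) convolutions applied in order.
# Widths are assumed for illustration only.
in_channels = 192
branches = {
    "1x1": [(1, 64)],
    "3x3": [(1, 96), (3, 128)],   # 1x1 bottleneck, then 3x3
    "5x5": [(1, 16), (5, 32)],    # 1x1 bottleneck, then 5x5
    "pool": [(1, 32)],            # 3x3 max-pool (no weights), then 1x1 projection
}
naive_branches = {
    "1x1": [(1, 64)],
    "3x3": [(3, 128)],            # same outputs, no bottleneck
    "5x5": [(5, 32)],
    "pool": [(1, 32)],
}


def branch_weights(in_ch, convs):
    """Number of conv weights along one branch (biases ignored)."""
    total = 0
    for kernel, out_ch in convs:
        total += kernel * kernel * in_ch * out_ch
        in_ch = out_ch
    return total


# Concatenation along the channel axis: output widths of all branches add up.
out_channels = sum(convs[-1][1] for convs in branches.values())
module_weights = sum(branch_weights(in_channels, c) for c in branches.values())
naive_weights = sum(branch_weights(in_channels, c) for c in naive_branches.values())

print(out_channels)    # 256
print(module_weights)  # 163328
print(naive_weights)   # 393216
```

The 1x1 convolutions handle the cross-channel mapping cheaply before the spatial 3x3 and 5x5 filters are applied, which is where most of the savings come from in this sketch.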

▶ ResNet

- Problem : Deep models should perform better than shallow networks, but in practice they don't, due to vanishing gradients.

-> Solution : Simply skip layers, so that no harm is done even if the gradient through them vanishes.

- Increase number of filters with depth

✔ Instead of max-pooling, down-sample spatially using strides!

- Variants of ResNet

DenseNet ; Add more skip connections

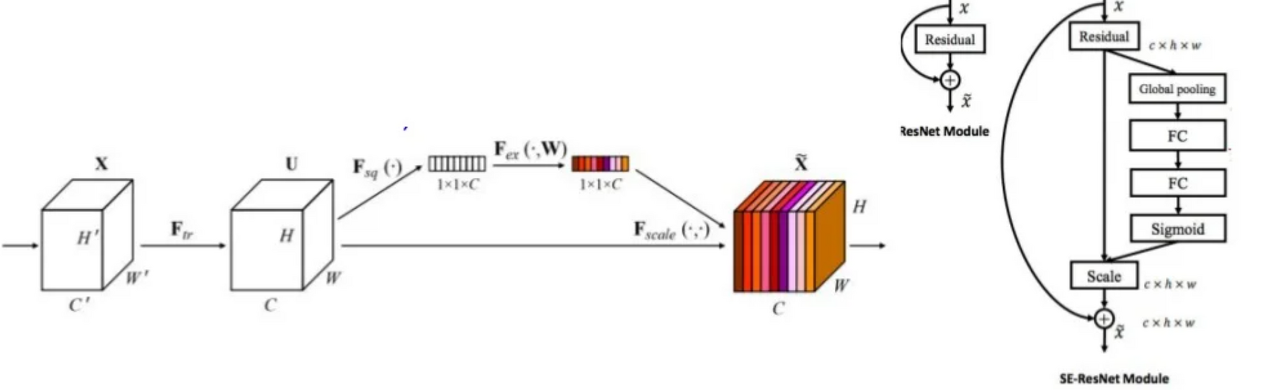
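Why skip connections help with vanishing gradients can be sketched with scalars. A residual block computes y = x + F(x), so its local gradient is 1 + F'(x) instead of F'(x). The depth of 20 and the local gradient of 0.1 are assumptions for illustration, and real networks work with Jacobian matrices rather than scalars.

```python
def plain_gradient(local_grads):
    """Gradient through a plain stack: product of each layer's local gradient."""
    g = 1.0
    for d in local_grads:
        g *= d
    return g


def residual_gradient(local_grads):
    """Gradient through residual blocks: y = x + F(x) gives dy/dx = 1 + F'(x)."""
    g = 1.0
    for d in local_grads:
        g *= 1.0 + d
    return g


local = [0.1] * 20  # assumed: 20 layers, each with a small local gradient

plain = plain_gradient(local)        # shrinks towards zero with depth
residual = residual_gradient(local)  # stays at or above 1 for non-negative local gradients

print(plain < 1e-15, residual > 1.0)  # True True
```

Even if every F'(x) were exactly zero, the residual product would still be 1, which is the sense in which skipping layers does no harm.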

▶ SENet

Adds a module of global pooling & FC layers to adaptively reweight the output feature maps.

The network chooses what to use : Acts like an attention mechanism...
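The reweighting described above can be sketched as global average pooling, two small FC layers, and a sigmoid gate per channel. This is a simplified sketch, not the reference implementation: biases are omitted, and the weights `w1` and `w2` are hand-picked hypothetical values rather than learned ones.

```python
import math


def squeeze_excite(feature_maps, w1, w2):
    """feature_maps: list of C channels, each an HxW list of lists.
    w1: (C/r) x C weights, w2: C x (C/r) weights (biases omitted)."""
    # Squeeze: global average pooling gives one number per channel.
    squeezed = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in feature_maps]
    # Excitation: FC -> ReLU -> FC -> sigmoid gives a gate in (0, 1) per channel.
    hidden = [max(0.0, sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [
        1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
        for row in w2
    ]
    # Reweight: scale every value in a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature_maps)]
    return scaled, gates


features = [
    [[1.0, 1.0], [1.0, 1.0]],  # channel 0, mean 1
    [[4.0, 4.0], [4.0, 4.0]],  # channel 1, mean 4
]
w1 = [[1.0, 1.0]]     # hypothetical: 2 channels -> 1 hidden unit
w2 = [[-1.0], [1.0]]  # hypothetical: 1 hidden unit -> 2 channel gates

scaled, gates = squeeze_excite(features, w1, w2)
```

With these hand-picked weights, channel 0 is almost switched off and channel 1 is kept almost unchanged, which is the attention-like behaviour the notes refer to.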

▶ SqueezeNet

AlexNet-level accuracy with 50x fewer parameters & < 0.5MB model, through constant 1x1 bottlenecking (= squeeze)

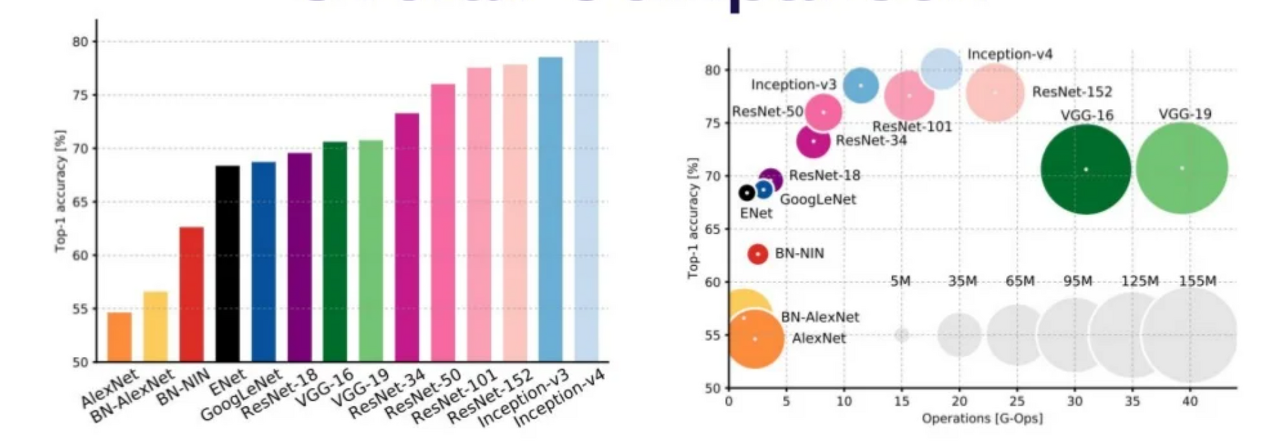
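The saving from 1x1 bottlenecking can be sketched by counting the parameters of a squeeze-then-expand module against a plain 3x3 convolution with the same input and output widths. The channel sizes (96 in, 16 squeeze, 64 + 64 expand) and the function names are assumptions for illustration.

```python
def conv_params(in_ch, out_ch, kernel, bias=True):
    """Weights (plus optional biases) of a square-kernel 2-D convolution."""
    return in_ch * out_ch * kernel * kernel + (out_ch if bias else 0)


def squeeze_expand_params(in_ch, squeeze, expand1x1, expand3x3):
    """1x1 squeeze layer, then parallel 1x1 and 3x3 expand layers."""
    return (
        conv_params(in_ch, squeeze, 1)
        + conv_params(squeeze, expand1x1, 1)
        + conv_params(squeeze, expand3x3, 3)
    )


# Assumed channel sizes, for illustration only.
in_ch, squeeze, e1, e3 = 96, 16, 64, 64

bottlenecked = squeeze_expand_params(in_ch, squeeze, e1, e3)
plain = conv_params(in_ch, e1 + e3, 3)  # direct 3x3 conv, same output width

print(bottlenecked)  # 11920
print(plain)         # 110720
```

In this sketch the bottlenecked module uses roughly a ninth of the parameters, because the expensive 3x3 filters only see the 16 squeezed channels.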

▶ 모델 비교

Some ResNets and Inception-v4 (Inception + ResNet) perform well in terms of both accuracy and memory/operations.

Computer Vision Tasks

Beyond classification, CNNs are used to solve a variety of tasks such as localization, detection, and segmentation.

Region Proposal Methods

Looking at every part of the image is computationally expensive, so only the regions of interest (ROI) are examined.

- R-CNN ; Generate region proposals with a slow external (non-deep-learning) method, then apply AlexNet to each ROI.

- Faster R-CNN ; Insert an RPN (Region Proposal Network) : Everything is in-network -> fast

- Mask R-CNN ; Also goes through an instance segmentation module.

Advanced tasks

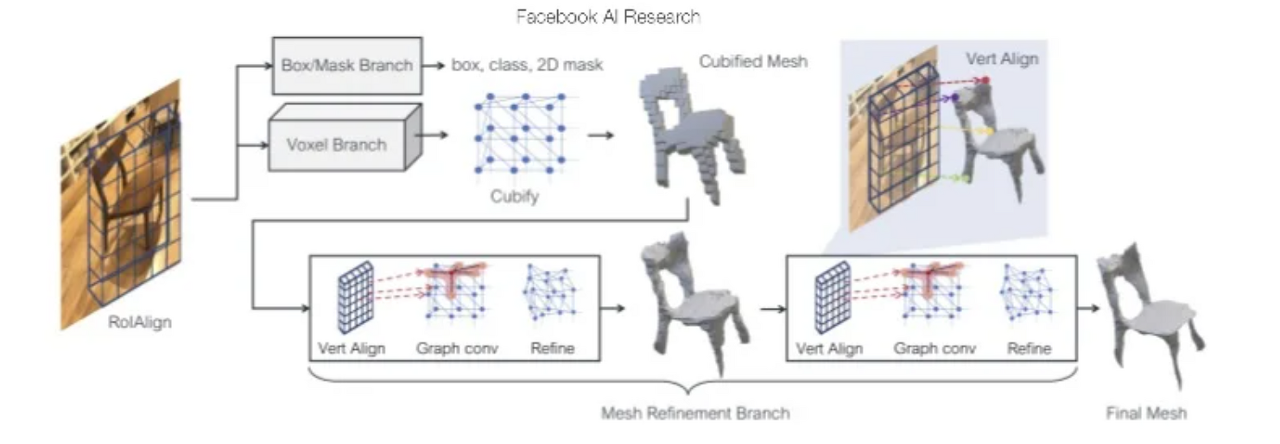

3D shape inference

Given a 2D image, detect objects and predict their 3D meshes : Mesh R-CNN

It can be trained on the ShapeNet dataset.

Beyond this, various other tasks such as face landmark recognition and pose estimation can be carried out.
